Review system components for Unicode-readiness

Assess your system components for supporting Indigenous languages. Find out which system components are likely to be Unicode-ready and what to consider when using them.

On this page:

- Unicode-ready systems

- Databases and Unicode

- System programming

- Mainframe systems

- Web servers

- File format

- Identify problematic string operations

Unicode-ready systems

The following table outlines some characteristics of Unicode-ready IM/IT systems:

| Characteristic | Likely Unicode-Ready | NOT likely Unicode-Ready |

|---|---|---|

| Programming language used | Java version 18; Python 3.x; JavaScript | C; C++; Python 2; PHP |

| Database encoding | UTF-8; UTF-16; UTF-32 | iso-8859-1(Latin1); windows-1252 (Western European); ASCII |

| String handling (system queries, searching, sorting, etc.) | Strings treated as sequence of bytes, not individual characters; Extra space allocated for string variables and database columns | Strings treated as sequences of characters; Possibly needing to know length of string in characters; What is the nth character, etc.; No extra space allocated for string variables and database columns. |

| Data edit rules | Program logic does not restrict inputs to a specific set or range of characters. | Program logic restricts inputs to a specific set or range of characters. |

| Web server and associated files | Web server configuration file (e.g., httpd.conf) has a directive recognizing Unicode, or individual pages have a UTF-8 meta tag. | Web server configuration file (e.g., httpd.conf) does not have a directive recognizing Unicode, and individual pages don’t have a UTF-8 meta tag. |
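
The "Data edit rules" row can be illustrated with a short Python sketch. The legacy pattern and both function names below are hypothetical, chosen only to show how a rule limited to a range of ASCII characters rejects a valid name, while a rule based on Unicode character categories accepts it. The set of allowed categories is an assumption and would need to be adapted to each system.

```python
import re
import unicodedata

# Hypothetical legacy edit rule: unaccented Latin letters, spaces,
# straight apostrophes and hyphens only.
ASCII_ONLY = re.compile(r"^[A-Za-z '\-]+$")

# Tk’emlúps te Secwe̓pemc, written with escapes so the code points are explicit.
NAME = "Tk\u2019eml\u00faps te Secwe\u0313pemc"


def is_valid_legacy(text: str) -> bool:
    """Return True only if every character is in the restricted ASCII set."""
    return bool(ASCII_ONLY.match(text))


def is_valid_unicode(text: str) -> bool:
    """Accept letters (L), combining marks (M), digits (N), punctuation (P)
    and plain spaces (Zs) from any script; reject control characters."""
    if not text:
        return False
    for ch in text:
        category = unicodedata.category(ch)
        if category[0] not in ("L", "M", "N", "P") and category != "Zs":
            return False
    return True


print(is_valid_legacy(NAME))   # False: the apostrophe, ú and combining mark are rejected
print(is_valid_unicode(NAME))  # True
```

Digits are allowed in the second rule because some orthographies use a numeral such as 7 as a letter.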

Databases and Unicode

When building a database, the character set and encoding combination must be specified for data storage. How this encoding is identified differs by database. The following table lists the acceptable Unicode character set encodings for several popular database management systems. These are all UTF-8 encodings.

UTF-8 is the preferred encoding for Unicode data because it is space efficient: with UTF-8, ASCII characters (unaccented letters, numbers, many punctuation characters) take just one byte each to store. A Unicode database that contains only ASCII characters will consume the same amount of space as an ASCII database.
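
The space claim above can be checked with a few lines of Python. This is a minimal sketch; the helper name `utf8_size` and the sample strings are illustrative and not taken from any particular system.

```python
def utf8_size(text: str) -> int:
    """Number of bytes needed to store text as UTF-8."""
    return len(text.encode("utf-8"))


ascii_text = "Kamloops, BC 2024"
name = "Tk\u2019eml\u00faps te Secwe\u0313pemc"  # Tk’emlúps te Secwe̓pemc

# ASCII-only text takes exactly the same space in UTF-8 as in ASCII.
print(utf8_size(ascii_text) == len(ascii_text.encode("ascii")))  # True

# Non-ASCII characters take two or more bytes each.
print(len(name))        # 23 code points
print(utf8_size(name))  # 27 bytes
```

The second result is why Unicode-ready systems size string variables and database columns with extra space: a column sized for 23 single-byte characters would not hold this 23-code-point name.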

| Database | Unicode encoding |

|---|---|

| Oracle | AL32UTF8 |

| PostgreSQL | UTF8 (character_repertoire = UCS) |

| SQL Server | ends in ‘_utf8’ |

| MySQL | utf8mb4 |

| MongoDB | UTF8 (This is standard for MongoDB) |

Review code samples and instructions on how to determine and set the encoding for a database.

Collation rules determine how characters are sorted and strings are compared, affecting case sensitivity, accent sensitivity and the order of characters in sorting. Different languages often have specific collations; for instance, French and English differ (as described in the Oracle Database Globalization and Support Guide). Binary comparison and sorting, which compare the encoded values of characters rather than applying language-specific rules, are frequently used. They are recommended for databases that store (either now or in the future) Indigenous language characters.
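
To make the behaviour of binary comparison concrete, here is a small Python sketch that sorts and compares strings by their UTF-8 bytes. It only illustrates the general idea; it is not how any specific database implements its binary collation, and the word list is made up for the example.

```python
import unicodedata

words = ["zebra", "\u00e9clair", "apple"]  # "éclair" starts with precomposed é

# Binary sort: order by the encoded bytes, with no language-specific rules.
binary_sorted = sorted(words, key=lambda w: w.encode("utf-8"))
print(binary_sorted)  # ['apple', 'zebra', 'éclair']

# Binary comparison treats two encodings of the same visible text as different.
composed = "\u00e9"     # é as a single code point
decomposed = "e\u0301"  # e followed by a combining acute accent
print(composed == decomposed)  # False

# Normalizing both sides to the same form before comparing makes them match.
print(unicodedata.normalize("NFC", decomposed) == composed)  # True
```

Binary ordering is predictable and language-neutral, but accented words sort after "z", and equal-looking strings match only if they were stored in the same normalization form.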

The following table lists the preferred collation setting for some common database management systems:

| Database | Collation |

|---|---|

| Oracle | BINARY |

| PostgreSQL | ucs_basic |

| SQL Server | ends in ‘_utf8’ |

| MySQL | starts with ‘utf8mb4_’ (e.g., utf8mb4_0900_ai_ci) |

| MongoDB | UTF8 (This is standard for MongoDB) |

Enabling the data search function in an application involves more than just setting the collation rules correctly. You must also provide a way for the person using the application to enter the text to be searched. This is more complicated when the text includes characters not found on a standard US keyboard. The FirstVoices program provides keyboards for enabling search, and smartphone apps for Apple and Android.

Review code samples and instructions for determining the sorting and matching collations used for character data in various database management systems.

System programming

Computer programs will likely need to be modified to support Unicode text if they perform operations like:

- Sorting

- Searching

- Matching

Programs written on the assumption that every character occupies exactly one byte will produce errors when dealing with multi-byte, variable-length Unicode text.
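
The following Python sketch shows one typical failure: truncating text to a fixed number of bytes, as a program sized for one-byte characters might do. The function names are hypothetical and the 4-byte limit is arbitrary.

```python
def truncate_bytes_naive(text: str, max_bytes: int) -> bytes:
    """Cut the UTF-8 bytes at a fixed position, as byte-oriented code would."""
    return text.encode("utf-8")[:max_bytes]


def truncate_bytes_safe(text: str, max_bytes: int) -> str:
    """Cut at max_bytes, then drop any incomplete trailing character."""
    data = text.encode("utf-8")[:max_bytes]
    return data.decode("utf-8", errors="ignore")


name = "Tk\u2019eml\u00faps"  # Tk’emlúps

print(len(name))                  # 9 code points
print(len(name.encode("utf-8")))  # 12 bytes

# The naive cut lands inside the three-byte apostrophe and is no longer valid UTF-8.
try:
    truncate_bytes_naive(name, 4).decode("utf-8")
except UnicodeDecodeError as exc:
    print("invalid UTF-8:", exc.reason)

print(truncate_bytes_safe(name, 4))  # Tk
```

Even the safer version works only at the level of code points. It can still separate a base letter from a combining accent that follows it.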

For Indigenous languages, what might look like single characters are often compositions of multiple Unicode characters. For example, the symbol é can be made up of two Unicode characters:

- The Latin letter e

- An overlaid acute accent

These compositions are called graphemes. To support the processing of Indigenous language text, programs must be able to segment the text into graphemes. Few common programming languages have built-in support for graphemes, but libraries for doing this are available in most of them.
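
As a rough illustration of grapheme segmentation, the Python sketch below groups each base character with the combining marks that follow it, using only the standard library. It is a deliberate simplification and does not implement the full Unicode segmentation rules (for example, it does not handle emoji sequences or Hangul syllables). The function name is hypothetical, and production code should use a dedicated segmentation library instead.

```python
import unicodedata


def split_graphemes_simple(text: str) -> list[str]:
    """Attach combining marks to the preceding base character.

    Simplified sketch only: covers base letter plus combining marks,
    not the complete Unicode grapheme cluster rules.
    """
    clusters: list[str] = []
    for ch in text:
        if clusters and unicodedata.combining(ch) != 0:
            clusters[-1] += ch   # combining mark: extend the current cluster
        else:
            clusters.append(ch)  # base character: start a new cluster
    return clusters


word = "Secwe\u0313pemc"  # Secwe̓pemc: the fifth letter is e + combining comma above

clusters = split_graphemes_simple(word)
print(len(word))      # 10 code points
print(len(clusters))  # 9 graphemes
print(clusters[4] == "e\u0313")  # True
```

Operations such as "take the first n characters" or "reverse the string" should work on the list of clusters rather than on individual code points.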

Mainframe systems

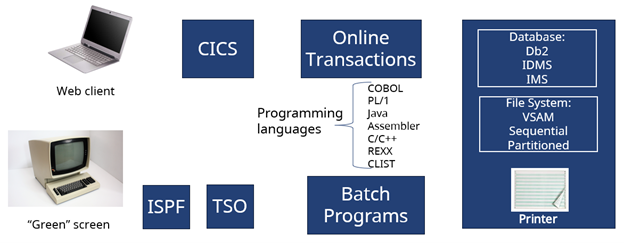

Mainframe systems deserve special coverage, as their components may or may not support Unicode. The following diagram illustrates some of the components present in mainframe systems:

Some components were designed around a specific non-Unicode character set, EBCDIC, and do not work with any other character set. This includes:

- Green screen terminals (IBM 3270 terminals and emulators)

- Mainframe printers

The other components, while originally designed for use with EBCDIC, are capable of supporting Unicode.

Web servers

Web servers can be configured to serve web pages that have Unicode content. In Apache web servers, this configuration can be done by adding the following line to the httpd.conf configuration file:

`AddDefaultCharset utf-8`

When configured this way, a page with the following HTML will appear correctly as Tk’emlúps te Secwe̓pemc:

<html><body>

<h1>Tk’emlúps te Secwe̓pemc </h1>

</body></html>

If that line is missing from the configuration file, then the same page might appear garbled, for example as Tkâ€™emlÃºps te SecweÌ“pemc.
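
Garbling of this kind typically happens when UTF-8 bytes are decoded with a single-byte encoding. The Python sketch below reproduces the effect, assuming the browser falls back to windows-1252. That fallback is an assumption for illustration; the actual behaviour depends on the browser and its settings.

```python
# Tk’emlúps te Secwe̓pemc, written with escapes so the code points are explicit.
page_text = "Tk\u2019eml\u00faps te Secwe\u0313pemc"

# The server sends the page as UTF-8 bytes.
utf8_bytes = page_text.encode("utf-8")

# Without a charset declaration, the bytes may be read as windows-1252,
# so each byte of a multi-byte character becomes its own wrong character.
garbled = utf8_bytes.decode("cp1252")
print(garbled)  # Tkâ€™emlÃºps te SecweÌ“pemc

# The bytes themselves are intact: decoding them as UTF-8 recovers the text.
print(garbled.encode("cp1252").decode("utf-8") == page_text)  # True
```

The data is not corrupted in this case, only misread, which is why declaring the charset is enough to fix the display.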

Regardless of how the web server is configured, Unicode support can be ensured by including a directive in the web page itself. For example, the following page will render properly even if the web server has not been configured for Unicode support, since the web page includes a meta charset directive:

<html><head>

<meta charset="UTF-8">

</head><body>

<h1>Tk’emlúps te Secwe̓pemc</h1>

</body></html>

File format

Data stored in files must be encoded in UTF-8. Specific guidance depends on the format of the file:

- CSV: Comma-separated value (CSV) files must include a byte order mark (BOM) at the start of the file to render properly in Excel.

- DBF: The dBase File Format (DBF) is a file format used by older desktop databases such as dBase, Clipper and FoxPro. It is also used to store non-spatial data in Esri shapefiles. Originally designed to store ASCII data, in more recent versions the default encoding was changed to ISO-8859-1. DBF files can store Unicode data encoded as UTF-8, but this is not the default.

- PDF: Microsoft Office documents converted to PDF through Microsoft Office will retain their UTF-8 encoding, and the BC Sans font will be embedded, allowing users to view the document even if they don’t have BC Sans installed on their system. No extra action is required.

- Microsoft Office: Unicode UTF-8 is the default encoding used in Microsoft Office products (e.g., Word, Excel, PowerPoint); no extra action is required.
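
For the CSV item in the list above, one way to write the byte order mark from Python is the standard `utf-8-sig` encoding, which adds the BOM automatically. The sketch below is minimal; the function name, file name and sample row are illustrative only.

```python
import csv
import os
import tempfile


def write_csv_with_bom(path: str, rows: list[list[str]]) -> None:
    """Write rows as UTF-8 CSV with a leading byte order mark."""
    # "utf-8-sig" writes the three BOM bytes before the first character.
    with open(path, "w", encoding="utf-8-sig", newline="") as f:
        csv.writer(f).writerows(rows)


rows = [["community"], ["Tk\u2019eml\u00faps te Secwe\u0313pemc"]]
path = os.path.join(tempfile.mkdtemp(), "communities.csv")
write_csv_with_bom(path, rows)

# Confirm that the file starts with the UTF-8 BOM (EF BB BF).
with open(path, "rb") as f:
    print(f.read(3) == b"\xef\xbb\xbf")  # True

# Reading with the same encoding strips the BOM again.
with open(path, encoding="utf-8-sig", newline="") as f:
    print(list(csv.reader(f)) == rows)  # True
```

Programs that read such files should also use `utf-8-sig`, or otherwise skip the BOM, so that it does not end up inside the first field.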

Identify problematic string operations

Once you've ensured your databases, programming languages and web servers are able to support Unicode, the next step is to identify potentially problematic system string operations.